I haven't completed any of the tasks I set myself in my last year in review. But as life springs random surprises on you, you find yourself arriving at a moment in time you never thought conceivable.

If someone had told me last year that 2021 would be the year I'd find someone and finally settle down, I'd have said they'd been drinking too much of the finest Rioja.

When such a shift in one's life happens, it takes utmost priority and, as a result, my blogging has taken a backseat. Since April, when this new stage in my life kicked up a notch, my posting has been a little sporadic.

Even though blogging hasn't been a priority this year, that isn't down to a lack of learning. I've been focusing on picking up some new life skills, as well as keeping on top of new technological advances at work on a daily basis.

2021 In Words/Phrases

Coronavirus, Covid-19, Omicron, Hubspot, Wedding, No Time To Die, Money Heist, Tailwind CSS, Prismic, Gatsby Prismic, Beard, Azure, Back To The Gym, Blenheim Light Show, Camping, Abingdon Fireworks, New Family/Friends

My Site

Believe it or not, I have been working on an updated version of the site, starting completely from scratch. This involved upgrading to the latest version of the Gatsby framework and redoing the front-end. Along the way, I came across a very good, tried and tested CSS framework called Tailwind CSS.

Tailwind is a utility CSS framework that allows for a quick turnaround when building blocks of markup for bespoke designs, using a library of flexible, predefined CSS classes. The main benefits I've found so far are that it has a surprisingly minimal footprint when built for production, and that many sites offer pre-developed HTML components you can customise and implement on your own site. Only time will tell whether this is the correct approach.

Beard Gains

Growing some facial hair wasn't an outcome of living like a hermit during these Covid times, but a requirement from my wife. My profile picture is due for an update to reflect this change in appearance. Even I don't recognise myself sometimes.

Statistics

When it comes to site statistics, I tend to lower my expectations so I'm not setting myself up for disappointment when checking Google Analytics. I wasn't expecting much from this year's stats due to my lack of contributions, but suffice to say I haven't fared too badly.

2020/2021 Comparison:

- Users: +41.09%

- Page Views: +45.56%

- New Users: +42.03%

- Bounce Rate: -3.06%

- Search Console Total Clicks: +254%

- Search Console Impressions: +295%

- Search Console Page Position: -8.3%

I'm both surprised and relieved that existing content is still gaining traction, resulting in more page views and users. The bounce rate has decreased a further 3.06% over last year. Out of all the statistics listed above, I believe the Search Console page position is the most important, and I'm quite disheartened that I've slipped in this area.

To my surprise, the site search I implemented earlier this year using Algolia is being used by visitors. This was very unexpected, as the primary reason I added a site search at all was for my own use.

One can only imagine how things could have been if I had managed to be more consistent with the number of posts published over the year.

Things To Look Into In 2022

NFT and Crypto

The main thing I want to look into further is the realm of cryptocurrency and NFTs. I’ve been following the likes of Dan Petty and Paul Stamatiou on Twitter, and they have opened my eyes to how things have moved on since I last took a brief look at this space.

Holiday

I haven’t been on holiday since my trip to the Maldives in 2019, and I’m well overdue another one. Preferably abroad, if I feel safe enough to do so and COVID allows.

Lego Ford Mustang

I purchased a Lego Creator Series Ford Mustang near the end of last year as an early Christmas present to myself, and I’ve still yet to complete it. I’ve only got as far as building the underlying chassis and suspension. It doesn’t even resemble a car yet. How embarrassing. :-)

On completion, it’ll make a fine centrepiece in my office.

Azure

Ever since I worked on a project at the start of the year involving Azure Functions, deployment slots and automation, I’ve been more interested in the services Azure has to offer. I’ve always stuck to hosting-related services and search indexing, and have ventured very little elsewhere. I’d like to keep researching this area, especially Cognitive Services.

Git On The Command-Line

Even though I’ve been using Git for as long as I’ve been working as a developer, it has always been through a GUI such as TortoiseGit or SourceTree. When it comes to interacting with a Git repo on the command-line, I’m not as experienced with the more complex commands as I’d like to be. In the past, when I have run complex commands without a GUI, it has been far more straightforward than I expected, yet I still naturally fall back to the comfort of a GUI when interacting with a repository.

Twitter Bot

For some reason, I have a huge interest in creating a Twitter bot that carries out some form of functionality based on the contents of a tweet. At this moment in time, I have no idea what the bot will do. Once I have thought of an endearing task for it to perform, development will begin.

Final Thoughts

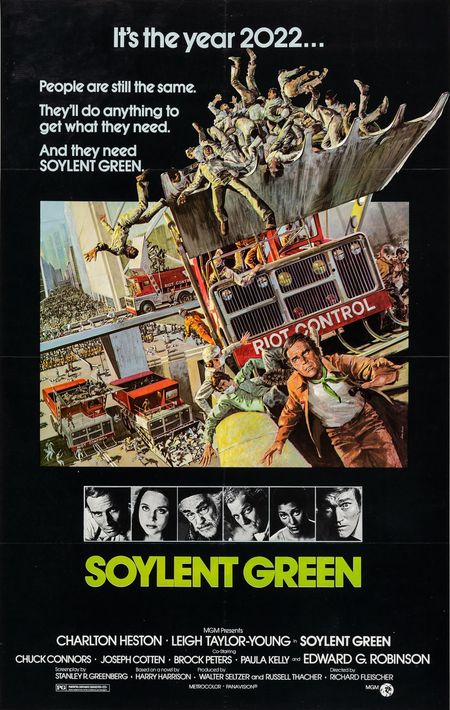

If you thought 2021 was bad enough with the sequel no one wanted (Covid part 2), we are just days away from entering 2022: the year in which the grim events of the fictional film Soylent Green take place.

Luckily for me, 2021 has been a productive year filled with personal and career accomplishments, and I'm hoping this continues into the new year. But I do feel it's time I pushed myself further.

I’d like to venture more into technologies that aren't part of my existing day-to-day coding languages or frameworks. This may make for more interesting blog posts. But to do this, I need to be more focused over the next year and set aside time for research and writing.