I've worked on numerous projects that required the user to upload one or more photos that they could then manipulate in some way, whether by adding filter effects or morphing their face for a TV show promotion.

In all of these projects, the user's uploaded photo must be kept for a specific amount of time: long enough for the user to manipulate their image. The question that always arose, from both a GDPR and a development perspective, was: how long should users' uploaded photos be stored?

Previously, these photos were stored in the cloud in a temporary blob storage container, with an hourly task that removed images older than 6 hours. This also ensured that the storage container remained small in size, lowering usage costs.

Then one day, it hit me... What if a user's uploaded photos could be stored locally through their own browser before any form of manipulation? Enter local storage...

What Is Local Storage?

Local storage allows data to be stored in the browser as key/value pairs. This data does not have a set expiration date and is not cleared when the browser is closed. Only string values can be stored in local storage. This will not be a problem: later in this post we'll see how to store a collection of images along with some data for each.
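Because local storage never expires anything on its own, any time limit has to be enforced by the page itself. As a rough sketch of how that could look, the hypothetical `pruneExpired()` helper below drops items older than a given age. It assumes each stored item carries a numeric `timestamp` in milliseconds (as produced by `Date.now()`); the helper name and the six-hour window are my own choices for illustration.

```javascript
// Hypothetical helper: keep only the items that are newer than maxAgeMs.
// Assumes every item has a numeric `timestamp` in milliseconds.
function pruneExpired(items, maxAgeMs, now = Date.now()) {
  return items.filter((item) => now - item.timestamp < maxAgeMs);
}

const SIX_HOURS_MS = 6 * 60 * 60 * 1000;

// Use a fixed "now" so the example is predictable.
const now = 1700000000000;
const sample = [
  { name: "image-1", timestamp: now - 7 * 60 * 60 * 1000 }, // 7 hours old
  { name: "image-2", timestamp: now - 60 * 1000 }           // 1 minute old
];

const fresh = pruneExpired(sample, SIX_HOURS_MS, now);
console.log(fresh.map((item) => item.name)); // only "image-2" remains
```

A page could run something like this on load and write the pruned array back to local storage.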

Example: Storing a Collection of Photos

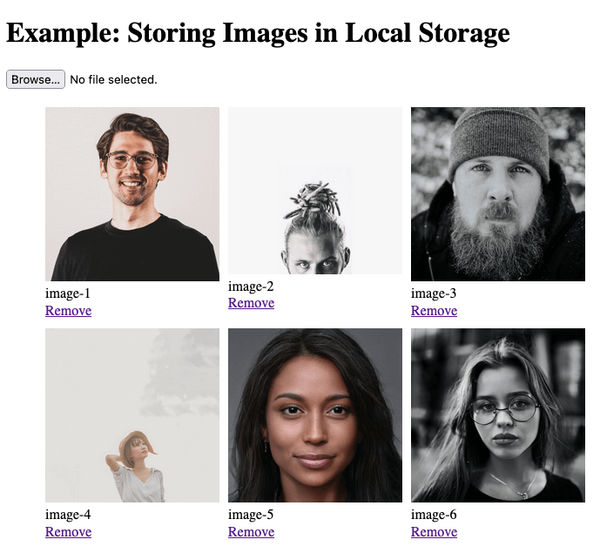

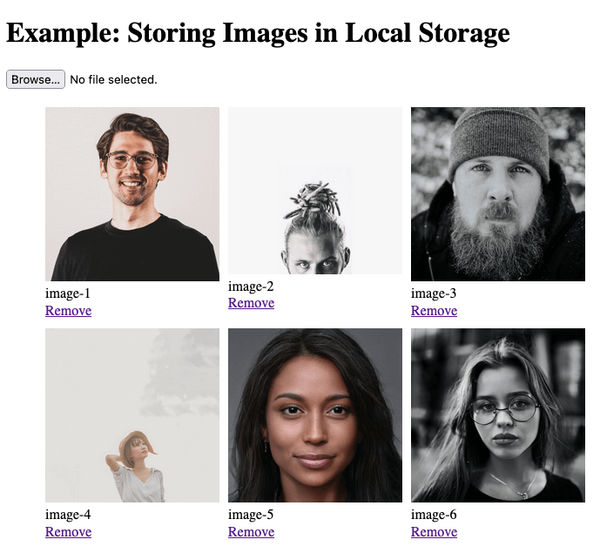

The premise of this example is to allow the user to upload a collection of photos. On successful upload, each photo is rendered on the page along with a link to remove it from the collection. Adding or removing a photo also updates the browser's localStorage.

A live demo of this page can be found on my JSFiddle account: https://jsfiddle.net/sbhomra/bts3xo5n/.

Code

HTML

<div>
  <h1>Example: Storing Images in Local Storage</h1>
  <input id="image-upload" type="file" />
  <ul id="image-collection"></ul>
</div>

JavaScript

const fileUploadLimit = 1048576; // 1MB in bytes. Formula: 1MB = 1 * 1024 * 1024.

const localStorageKey = "images";

let imageData = [];

// Render image in HTML by adding to the unordered list.
function renderImage(imageObj, $imageCollection) {
  if (imageObj.file_base64.length) {
    $imageCollection.append("<li><img src=\"data:image/png;base64," + imageObj.file_base64 + "\" width=\"200\" /><br />" + imageObj.name + "<br /><a href=\"#\" data-timestamp=\"" + imageObj.timestamp + "\" class=\"btn-delete\">Remove</a></li>");
  }
}

// Add image to local storage.
function addImage(imageObj) {
  imageData.push(imageObj);
  localStorage.setItem(localStorageKey, JSON.stringify(imageData));
}

// Remove image from local storage by timestamp.
function removeImage(timestamp) {
  // Remove item by the timestamp.
  imageData = imageData.filter(img => img.timestamp !== timestamp);
  // Update local storage.
  localStorage.setItem(localStorageKey, JSON.stringify(imageData));
}

// Read image data stored in local storage.
function getImages($imageCollection) {
  const localStorageData = localStorage.getItem(localStorageKey);
  if (localStorageData !== null) {
    // Parse the stored JSON string back into an array of image objects.
    imageData = JSON.parse(localStorageData);
    for (let i = 0; i < imageData.length; i++) {
      renderImage(imageData[i], $imageCollection);
    }
  }
}

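// Delete button action to fire off deletion.
function deleteImageAction() {
  // Unbind first: this function runs after every upload, and binding again
  // would otherwise stack duplicate click handlers on existing buttons.
  $(".btn-delete").off("click").on("click", function(e) {
    e.preventDefault();
    removeImage($(this).data("timestamp"));
    // Remove the HTML markup for this image.
    $(this).parent().remove();
  });
}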

// Upload action to fire off file upload automatically.
function uploadChangeAction($upload, $imageCollection) {
  $upload.on("change", function(e) {
    e.preventDefault();
    // Ensure validation message is removed (if one is present).
    $upload.next("p").remove();
    const file = e.target.files[0];
    // Nothing to do if no file was selected (e.g. the dialog was cancelled).
    if (!file) {
      return;
    }
    if (file.size <= fileUploadLimit) {
      const reader = new FileReader();
      // onload only fires when the read succeeds, so reader.result is a data URL string.
      reader.onload = () => {
        // Strip the "data:<mime type>;base64," prefix to leave only the base64 payload.
        const base64String = reader.result
          .replace('data:', '')
          .replace(/^.+,/, '');
        // Create an object containing image information.
        const imageObj = {
          name: "image-" + ($imageCollection.find("li").length + 1),
          timestamp: Date.now(),
          file_base64: base64String
        };
        // Render the image, then add it to local storage.
        renderImage(imageObj, $imageCollection);
        addImage(imageObj);
        deleteImageAction();
        // Clear upload element.
        $upload.val("");
      };
      reader.readAsDataURL(file);
    } else {
      $upload.after("<p>File too large</p>");
    }
  });
}

// Initialise.
$(document).ready(function() {
  getImages($("#image-collection"));
  // Set action events.
  uploadChangeAction($("#image-upload"), $("#image-collection"));
  deleteImageAction();
});

The key functions to look at are:

- addImage()

- removeImage()

- getImages()

Each of these functions works with the uploaded photos as an array of objects, where each photo has a name, a timestamp and a base64 string. Since local storage only holds strings, they all rely on JSON methods to store and read the collection:

- JSON.stringify() - to convert an array of objects into a JSON string.

- JSON.parse() - to convert a JSON string into an object array for manipulation.

Every value saved to or retrieved from local storage is identified by a unique "key". In my example, I've set the following global constant, which is referenced whenever I call the "localStorage" methods.

const localStorageKey = "images";

When saving to localStorage, we will have to stringify our array of objects:

localStorage.setItem(localStorageKey, JSON.stringify(imageData));

Retrieving our array requires us to convert the value from a string back into an object:

imageData = JSON.parse(localStorage.getItem(localStorageKey))
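The round trip above assumes the stored string is always valid JSON. As a minimal sketch of a more defensive read, the hypothetical `loadImages()` helper below falls back to an empty array when the key is missing or the value cannot be parsed. It accepts any object with a `getItem()` method, so the in-memory stand-in used here (also hypothetical, for illustration only) can be swapped for the real `localStorage` in a browser.

```javascript
// Hypothetical helper: read an array from a Storage-like object,
// returning [] when the key is missing or the value is not a JSON array.
function loadImages(storage, key) {
  const raw = storage.getItem(key);
  if (raw === null) {
    return [];
  }
  try {
    const parsed = JSON.parse(raw);
    return Array.isArray(parsed) ? parsed : [];
  } catch (err) {
    // The stored string was not valid JSON.
    return [];
  }
}

// Minimal in-memory stand-in for localStorage, for illustration only.
function createMemoryStorage() {
  const map = new Map();
  return {
    getItem: (key) => (map.has(key) ? map.get(key) : null),
    setItem: (key, value) => { map.set(key, String(value)); }
  };
}

const storage = createMemoryStorage();
storage.setItem("images", JSON.stringify([
  { name: "image-1", timestamp: 1, file_base64: "AAAA" }
]));

const images = loadImages(storage, "images");
console.log(images.length); // 1
```

In the browser, the equivalent call would be `loadImages(localStorage, localStorageKey)`.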

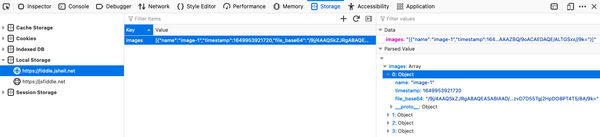

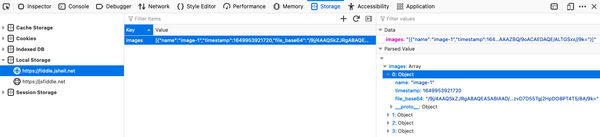

After we've uploaded some images, we can see what's stored using the browser's developer tools. In Firefox, open Web Developer Tools, navigate to the "Storage" tab and select your site. In Chrome, go to the "Application" tab and click on "Local Storage".

Storage Limits

The maximum amount of data that can be stored varies by browser, and the limit applies to everything a site stores rather than to each individual value. It currently ranges between 2MB and 10MB.
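When the limit is reached, `setItem()` throws an exception rather than failing silently (browsers typically report it as a `QuotaExceededError`). A minimal sketch of guarding against that is shown below. The `trySave()` helper and the size-limited stand-in storage are hypothetical and exist only to make the example runnable outside a browser.

```javascript
// Hypothetical helper: try to persist a value, returning false instead of throwing.
function trySave(storage, key, value) {
  try {
    storage.setItem(key, JSON.stringify(value));
    return true;
  } catch (err) {
    // Browsers typically throw here when the site's storage quota is used up.
    return false;
  }
}

// Stand-in storage with a tiny character limit to simulate a full quota.
function createLimitedStorage(maxChars) {
  const map = new Map();
  return {
    getItem: (key) => (map.has(key) ? map.get(key) : null),
    setItem: (key, value) => {
      const text = String(value);
      if (text.length > maxChars) {
        const error = new Error("Quota exceeded (simulated)");
        error.name = "QuotaExceededError";
        throw error;
      }
      map.set(key, text);
    }
  };
}

const tiny = createLimitedStorage(50);
const savedSmall = trySave(tiny, "images", [{ name: "a" }]);
const savedLarge = trySave(tiny, "images", [{ name: "x".repeat(100) }]);
console.log(savedSmall, savedLarge); // true false
```

A failed save leaves the previously stored value untouched, so the page can show a message such as "Storage full" and keep working with what it already has.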

When I decided to use local storage to store user photos, I was concerned about exceeding storage limits, so I set an upload limit of 1MB per photo. When I get the chance to use my code in a real-world scenario, I intend to use Hermite Resize to implement some image compression and resizing techniques.
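One thing worth keeping in mind with the 1MB limit is that base64 encoding makes the stored string roughly a third larger than the original file, since every 3 bytes become 4 characters. The hypothetical `base64Length()` helper below is a small sketch of that arithmetic; how a browser counts those characters against its quota is not something this example tries to model.

```javascript
// Number of base64 characters needed to encode `byteLength` bytes (including padding).
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3);
}

const uploadLimitBytes = 1048576; // 1MB, the same limit used in the example
const encodedChars = base64Length(uploadLimitBytes);
console.log(encodedChars); // 1398104
```

So a photo right at the upload limit takes up about 1.4 million characters once encoded, and only a handful of such photos would fit within the smaller browser limits.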