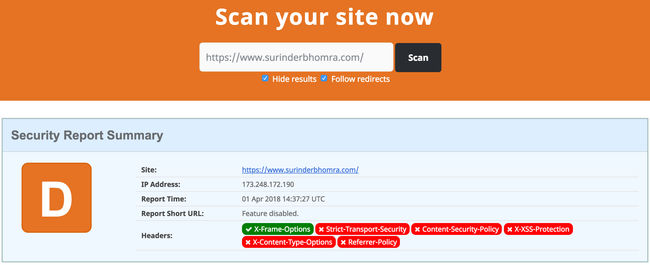

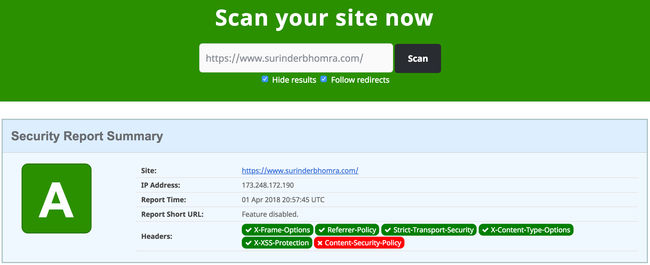

Ever since I redeveloped my website in Kentico 10 using Portal Templates, I have been a bit more daring about immersing myself in the inner depths of Kentico's API and, more importantly, K# macro development. One thing that had been on my to-do list for a long time was a custom macro extension that renders all the required Open Graph META tags for a page.

Adding these types of META tags with ASPX templates or the MVC framework is really easy when you have full control over the page markup. I'll admit I don't know whether there is already an easier way to do this with Portal Templates (if there is, let me know), but I think this macro is quite flexible, with the capability to expand the Open Graph output further.

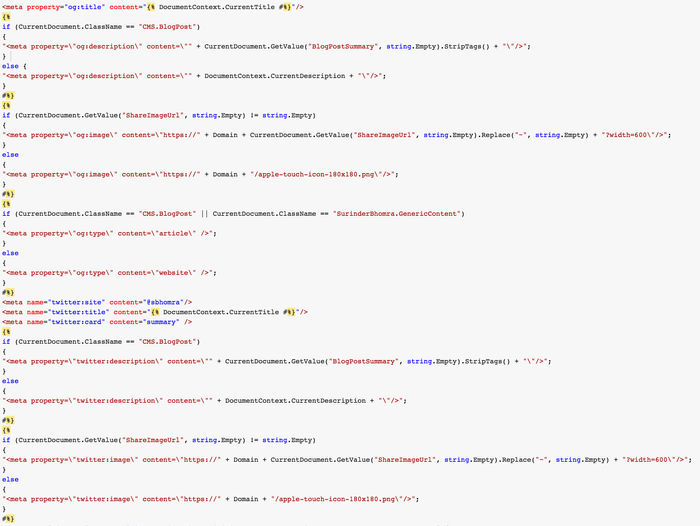

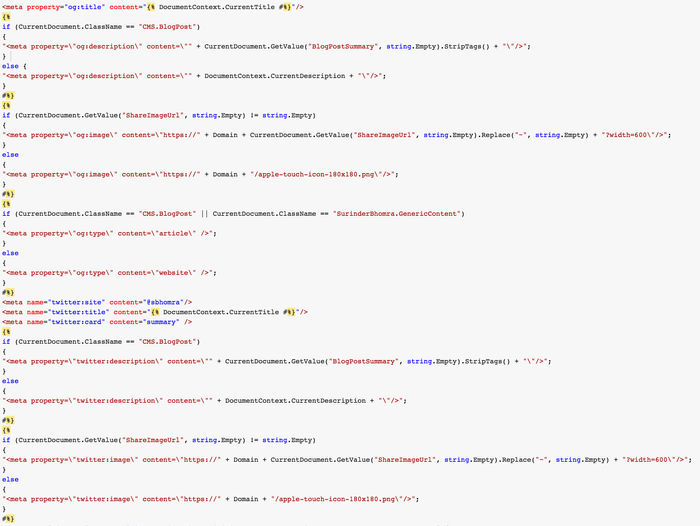

This is how I previously rendered the META HTML at Masterpage level within my own website:

I instantly had problems with this approach:

- The code output is a mess.

- From a performance standpoint, it is not as efficient as I would expect.

- Code maintainability is not straightforward, especially when you have to update this code within the Page Template Header content.

CurrentDocument.OpenGraph() Custom Macro

I highly recommend reading Kentico's documentation on Registering Custom Macro Methods before adding my code. It gives more insight into what can be done than this blog post alone can cover. My macro was developed for a Kentico site set up as a Web Application project, so the class has been added to the "Old_App_Code" directory.

```csharp
using System;
using System.Text;
using System.Web;
using CMS;
using CMS.DocumentEngine;
using CMS.Helpers;
using CMS.MacroEngine;
using CMSApp.Old_App_Code.Macros;

// Registers the methods in the 'CustomMacroMethods' container as extensions of TreeNode,
// so they can be called on page objects such as CurrentDocument.
[assembly: RegisterExtension(typeof(CustomMacroMethods), typeof(TreeNode))]

namespace CMSApp.Old_App_Code.Macros
{
    // Note: KenticoConstants, Config and KenticoHelper are site-specific helper classes,
    // not part of Kentico's API; add using directives for wherever they live in your project.
    public class CustomMacroMethods : MacroMethodContainer
    {
        [MacroMethod(typeof(string), "Generates Open Graph META tags", 0)]
        [MacroMethodParam(0, "param1", typeof(string), "Default share image")]
        public static object OpenGraph(EvaluationContext context, params object[] parameters)
        {
            // parameters[0] is the page the method is called on and parameters[1] is the
            // default share image, so both must be present before either is read.
            if (parameters.Length > 1 && parameters[0] is TreeNode)
            {
                #region Parameter variables

                // Parameter 1: Current document.
                TreeNode tnDoc = (TreeNode)parameters[0];

                // Parameter 2: Default social icon.
                string defaultSocialIcon = parameters[1].ToString();

                #endregion

                string metaTags = CacheHelper.Cache(
                    cs =>
                    {
                        // Scheme, host and (non-default) port of the current request, e.g. "https://example.com".
                        string domainUrl = HttpContext.Current.Request.Url.GetLeftPart(UriPartial.Authority);

                        // Blog posts use their summary (HTML removed); all other pages use the page description.
                        string description = tnDoc.ClassName == KenticoConstants.Page.BlogPost
                            ? tnDoc.GetValue("BlogPostSummary", string.Empty).RemoveHtml()
                            : tnDoc.DocumentPageDescription;

                        // Use the page's own share image (resized to 600px wide) if set, otherwise the default.
                        string shareImageUrl = tnDoc.GetStringValue("ShareImageUrl", string.Empty);
                        string imageUrl = shareImageUrl != string.Empty
                            ? $"{domainUrl}{shareImageUrl.Replace("~", string.Empty)}?width=600"
                            : $"{domainUrl}/{defaultSocialIcon}";

                        // Blog Post and Generic Content pages are articles; everything else is a website.
                        string ogType = tnDoc.ClassName == KenticoConstants.Page.BlogPost || tnDoc.ClassName == KenticoConstants.Page.GenericContent
                            ? "article"
                            : "website";

                        StringBuilder metaTagBuilder = new StringBuilder();

                        #region General OG Tags

                        metaTagBuilder.Append($"<meta property=\"og:title\" content=\"{DocumentContext.CurrentTitle}\" />\n");
                        metaTagBuilder.Append($"<meta property=\"og:description\" content=\"{description}\" />\n");
                        metaTagBuilder.Append($"<meta property=\"og:image\" content=\"{imageUrl}\" />\n");
                        metaTagBuilder.Append($"<meta property=\"og:type\" content=\"{ogType}\" />\n");

                        #endregion

                        #region Twitter Card Tags

                        metaTagBuilder.Append($"<meta name=\"twitter:site\" content=\"@{Config.Twitter.Account}\" />\n");
                        metaTagBuilder.Append($"<meta name=\"twitter:title\" content=\"{DocumentContext.CurrentTitle}\" />\n");
                        metaTagBuilder.Append("<meta name=\"twitter:card\" content=\"summary\" />\n");
                        metaTagBuilder.Append($"<meta name=\"twitter:description\" content=\"{description}\" />\n");
                        metaTagBuilder.Append($"<meta name=\"twitter:image\" content=\"{imageUrl}\" />");

                        #endregion

                        // Set up the cache dependency only when caching is active, so the cached
                        // output is cleared whenever this page is updated.
                        if (cs.Cached)
                        {
                            cs.CacheDependency = CacheHelper.GetCacheDependency($"documentid|{tnDoc.DocumentID}");
                        }

                        return metaTagBuilder.ToString();
                    },
                    new CacheSettings(Config.Kentico.CacheMinutes, KenticoHelper.GetCacheKey($"OpenGraph|{tnDoc.DocumentID}"))
                );

                return metaTags;
            }

            throw new NotSupportedException();
        }
    }
}
```
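
One gap worth flagging: the macro writes values such as the page title and description straight into the `content` attribute, so a title containing a double quote would produce broken markup. Below is a minimal sketch of a helper that HTML-encodes the value first using the standard `System.Net.WebUtility.HtmlEncode`. The `MetaTagHelper` class and its method names are hypothetical, not part of Kentico's API, and if any of your values are already encoded (worth checking for the page title), encoding them again would double-escape entities.

```csharp
using System.Net;

// Hypothetical helper (not part of Kentico): builds one <meta> tag with the
// content value HTML-encoded so quotes and angle brackets cannot break the markup.
public static class MetaTagHelper
{
    // attributeName is "property" for og:* tags and "name" for twitter:* tags.
    public static string Build(string attributeName, string key, string value)
    {
        // WebUtility.HtmlEncode escapes characters such as <, >, & and ".
        string encoded = WebUtility.HtmlEncode(value ?? string.Empty);
        return $"<meta {attributeName}=\"{key}\" content=\"{encoded}\" />\n";
    }

    // Convenience wrappers for the two attribute styles used by the macro.
    public static string OpenGraph(string key, string value) => Build("property", key, value);

    public static string Twitter(string key, string value) => Build("name", key, value);
}
```

Inside the macro, a line such as the `og:title` append could then become `metaTagBuilder.Append(MetaTagHelper.OpenGraph("og:title", DocumentContext.CurrentTitle));`.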

This macro has been tailored specifically to my site's needs in terms of how the OG META tags are populated, but it is flexible enough to be modified for a different site. I check the page type to determine which pages are classed as "article" or "website"; in this case, I am looking for my Blog Post and Generic Content pages.
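
If more page types need to be classed as articles, chained `||` checks get unwieldy. Here is a sketch of a data-driven alternative, assuming a lookup set of class names. The names `Custom.BlogPost` and `Custom.GenericContent` are hypothetical placeholders for whatever values sit behind `KenticoConstants.Page.BlogPost` and `KenticoConstants.Page.GenericContent`, and the case-insensitive comparison is a defensive choice rather than something the macro does.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical resolver: maps a page type class name to an og:type value.
public static class OgTypeResolver
{
    // Placeholder class names; replace with your own page type code names.
    private static readonly HashSet<string> ArticleClassNames =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase)
        {
            "Custom.BlogPost",
            "Custom.GenericContent"
        };

    // Article page types return "article"; everything else (including null) returns "website".
    public static string Resolve(string className) =>
        className != null && ArticleClassNames.Contains(className) ? "article" : "website";
}
```

Adding another article page type then means adding one entry to the set instead of another condition.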

I am also quite specific about how the OG description is populated. Since my website is very blog-orientated, the description fields are populated from the "BlogPostSummary" field (with its HTML removed) if the current page is a Blog Post, and from the "DocumentPageDescription" field otherwise.
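
The description rule can be pulled out into a small function so it is computed once and reused for both the `og:description` and `twitter:description` tags. The sketch below is standalone, so a simple regular expression stands in for the `RemoveHtml()` call used in the macro (it does not decode entities and is not a full HTML parser). Falling back to the page description when a blog post's summary is empty is an added assumption; the macro itself does not do this. The `OgDescriptionResolver` name is hypothetical.

```csharp
using System.Text.RegularExpressions;

// Hypothetical resolver for the og:description / twitter:description value.
public static class OgDescriptionResolver
{
    // Crude tag stripper standing in for the RemoveHtml() call in the macro.
    private static readonly Regex Tags = new Regex("<[^>]*>", RegexOptions.Compiled);

    public static string Resolve(bool isBlogPost, string blogPostSummary, string pageDescription)
    {
        // Blog posts use their summary with HTML tags removed...
        if (isBlogPost && !string.IsNullOrWhiteSpace(blogPostSummary))
        {
            return Tags.Replace(blogPostSummary, string.Empty).Trim();
        }

        // ...all other pages (and, as an assumption, blog posts without a summary) use the page description.
        return pageDescription ?? string.Empty;
    }
}
```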

Finally, I added a new Page Type field called "ShareImageUrl" to all article pages, so that I have the option to choose a share image. A selected image is requested at a width of 600 pixels via the "?width=600" query string. The field is not compulsory: if no image has been selected, the default share image passed to the macro as a parameter is used instead.
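
The share-image fallback can be sketched on its own as well. This version uses the standard `Uri.GetLeftPart(UriPartial.Authority)` to get the scheme, host and any non-default port. It assumes "ShareImageUrl" holds a `~/`-prefixed virtual path, and it strips only a leading tilde (the macro removes every `~`) plus a leading slash on the default path, to avoid a double slash. The `ShareImageResolver` name is hypothetical. One related caveat: because the macro bakes the domain into output cached per DocumentID only, a site served on several domains would reuse whichever domain was cached first, so including the host in the cache key may be worth considering.

```csharp
using System;

// Hypothetical resolver for the og:image / twitter:image URL.
public static class ShareImageResolver
{
    public static string Resolve(Uri requestUrl, string shareImageUrl, string defaultImagePath)
    {
        // Scheme + host (+ port when non-default), e.g. "https://example.com".
        string domainUrl = requestUrl.GetLeftPart(UriPartial.Authority);

        if (!string.IsNullOrEmpty(shareImageUrl))
        {
            // "~/media/share.jpg" -> "/media/share.jpg", then request a 600px-wide version.
            return $"{domainUrl}{shareImageUrl.TrimStart('~')}?width=600";
        }

        // No page-specific image: fall back to the default image passed to the macro.
        return $"{domainUrl}/{(defaultImagePath ?? string.Empty).TrimStart('/')}";
    }
}
```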

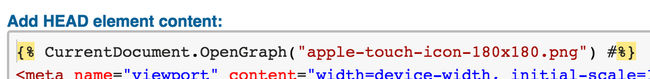

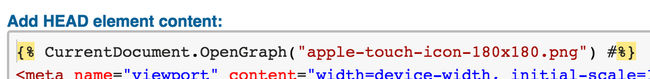

Using the macro is pretty simple. In the header section of your Masterpage template, just add the following:

As you can see, the OpenGraph() macro is called on the current document (CurrentDocument.OpenGraph()), passing in a default share icon as its parameter.

Macro Benchmark Results

This is where things get interesting! I ran both macro implementations through Kentico's Benchmark tool to make sure I was on the right track and that the effort of developing a custom macro extension wasn't in vain. The proof is in the pudding (as they say!).

| Metric | Old Implementation | New Implementation - OpenGraph() Custom Macro |
|---|---|---|
| Total runs | 1000 | 1000 |
| Total benchmark time | 1.20367s | 0.33222s |
| Total run time | 1.20267s | 0.33022s |
| Average time per run | 0.00120s | 0.00033s |
| Min run time | 0.00000s | 0.00000s |
| Max run time | 0.01700s | 0.01560s |

The good news is that the OpenGraph() macro outperformed my previous approach on every measure except minimum run time, where both recorded 0s: the average time per run dropped from 0.00120s to 0.00033s, roughly 3.6 times faster. I believe the main reasons are caching the generated META tag output and reusing the current document context when reading page values.