I can only speak from my experience of working in the technical industry, but not a week goes by without my being spammed by recruitment agencies that don't seem to get the message that I'm not interested. I can "almost" deal with the random emails I get from various agencies, but when you get targeted by a single person on a daily basis, it gets infuriating!

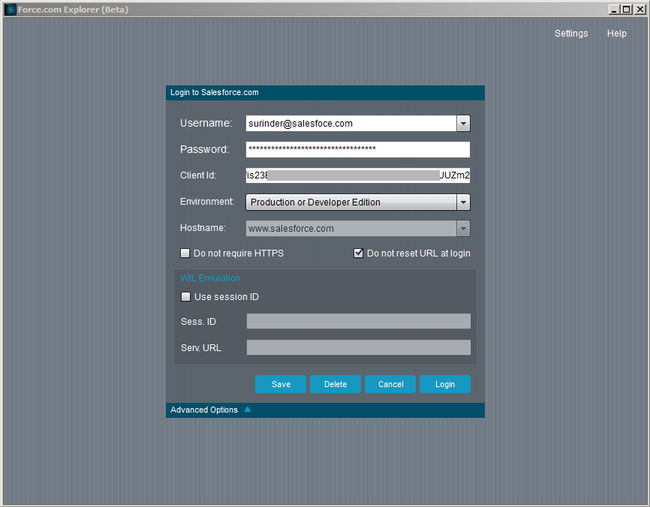

I remember back in the day, when I was fresh out of university, the sense of excitement I had whenever a recruiter phoned or emailed. The awesome feeling that I was in demand! I can still remember my first job interview, where to my horror I found out that the recruiter had "tweaked" my CV by adding skills I had no experience of, which made me look like an absolute idiot in front of my interviewer. I thought they were my friend and had my best interests at heart. In reality, this is not the case.

As an outsider looking in, the recruitment industry seems to be a really cut-throat business where only one thing seems to matter: the numbers! Not whether a candidate is actually right for the role. I am not tarring all recruiters with the same brush; there are some good guys out there, just not enough.

A little while ago, I was "tag teamed" by two recruitment agents working at the same agency within a very short space of time. What I'd like to highlight here is that I did not respond to any of their correspondence, but the messages still kept rolling in.

From Emma...

Emma sent me standard emails and was quite persistent: one brief LinkedIn message accompanied by three direct emails.

From: Emma

Sent: 06 July 2016 14:37

To: 'surinder@doesntwantajob.com’

Subject: New Development Opportunities based in the Oxfordshire area!

Dear Surinder,

How are you?

I just wanted to catch up following on from my prior contact last week over LinkedIn, as mentioned I did come across your profile on LinkedIn and I would be keen to firstly introduce myself, as well as to catch up to find out if you could be open to hearing about anything new.

As mentioned in my prior email, I am also recruiting for a .Net Developer for a leading organisation based in South Oxfordshire! I would be extremely keen to discuss this position with yourself in further detail.

Please can you give me a call or drop me an email to let me know your thoughts either way. Hope to hear from you soon!

Kind Regards,

Emma

From: Emma

Sent: 12 July 2016 14:45

To: 'surinder@doesntwantajob.com’

Subject: Could you be interested in working for a leading Software House, Surinder?

Hi Surinder,

How are you?

Could you be interested in new roles at present?

If so as mentioned below, I am working on a new .Net Developer role for a leading company based in South Oxfordshire. Please can you give me a call or drop me an email through to let me know your thoughts.

I will look forwards to speaking with you soon!

Kind Regards,

Emma

From: Emma

Sent: 15 July 2016 14:14

To: 'surinder@doesntwantajob.com’

Subject: Could you be interested in working for a leading Software House, Surinder?

Hi Surinder,

How are you?

Are you on the look out for new roles?

If so as mentioned below, I am working on a new .Net Developer role for a leading company based in South Oxfordshire. Please can you give me a call or drop me an email through to let me know your thoughts.

I will look forwards to speaking with you soon!

Kind Regards,

Emma

From Becky...

Now Becky cranked things up a notch or two. She was determined to get my attention and very persistent, I'll give her that. Her strategy consisted of LinkedIn messages, following me on Twitter and (like Emma) sending me a few emails.

From: Becky

Sent: 07 August 2015 11:37

To: 'surinder@doesntwantajob.com’

Subject: Could you be interested?

Hi Surinder,

I’ve come across your profile on LinkedIn and it made me think you could possibly be interested in a Web Developer opportunity that I’m currently recruiting for, based at a leading company in West Oxfordshire.

I appreciate that you may not be actively looking at the moment, but I can see that you have been at for over 5 years now, so I wanted to approach you about this as I thought you could possibly be interested in a change?

I’ve included some more information about the role attached.

<Omitted Job Description>

I can see you’re working in an agency environment at the moment at <Omitted Company>, which is obviously great for the variety of sites you get to work on. This role will give you that opportunity as well, but with the chance to engage more with the projects your working on, having a deeper involvement in the entire process.

You will get to work with the latest versions of ASP.Net and C#. My client also gives you full access to Pluralsight. On top of all this, the working environment is the most beautiful in Oxfordshire and there are excellent environmental benefits. The salary is up to <Omitted Salary>.

That was the first email. A standard recruiter's email, but one that went the extra mile to personalise things based on my current experience. The second email gets a little more to the point, and I start to smell a sense of desperation.

From: Becky

Sent: 11 August 2015 12:34

To: 'surinder@doesntwantajob.com’

Subject: Could you be interested?

Hi Surinder,

I just wanted to send you another email following up on my previous one below, regarding a Web Developer opportunity I am currently recruiting for based in West Oxfordshire. I have attached some more information for you to the email.

I’d really like the opportunity to chat to you about this opportunity, as I think it could be a really great fit for you. The company are a great one to work for – they offer you fantastic environmental benefits, a beautiful working space, plus are keen to create an interesting and productive environment for developers with full access to Pluarlsight and the latest versions of ASP.Net and C#.

If you are interested in discussing this with me further it would be great if you could get back to me! However, as I said in my previous email, if you’re not interested n pursuing new opportunities then please do just let me know and I shall remove you from our mailing list right away.

Kind Regards

Becky

I think by the third and final email, Becky finally got the message and knew I wasn't going to take the bait. But she admirably tries to get something out of it by asking if I know anyone else who might be interested in the position she is offering.

From: Becky

Sent: 13 August 2015 14:10

To: 'surinder@doesntwantajob.com’

Subject: Could you be interested?

Hi Surinder,

I just wanted to send you a final email about the role below and attached to this email. I think with your experience at <Omitted Company> you would be a great fit for it, so I would love to have a chat with you about it if you think you could be interested. However, if you’re not looking for a new role – seeing as you are an expert in this field – would you know anyone else with your skill set who may be interested in this position? If so, please do not hesitate to pass on my details!

Kind Regards

Becky

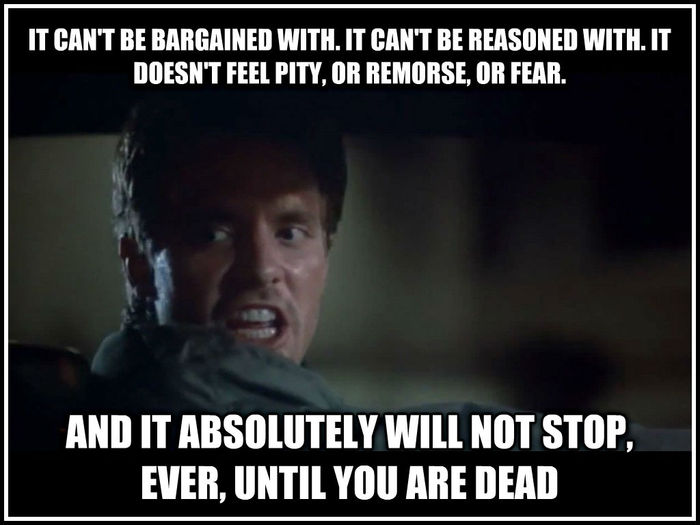

Is it acceptable to go to such great lengths to get someone's attention? A single email alone should suffice. I understand they have a job to do, but do they really think this approach works? They're clearly not engaging candidates in the right way, and I truly question the mentality here. Recruiters remind me of Terminators... just without the killing part.

Doesn't sound hopeful, does it? But there are two things you can do to lessen the headache and make yourself less of a target, while still keeping in the loop (if you feel so inclined) with any good opportunities that may arise:

- First and foremost, do NOT ever respond! Not even to tell them you're not interested. As soon as they know the email address is active and see signs of life, you'll never get them to leave you alone.

- If you want to enquire about a position via a recruitment agent, use a different contact email address. That way, you can ditch it when you need to.

I loved reading the article titled "Stop The Recruiting Spam. Seriously.", an insightful read covering some really good points on the state of the recruitment industry.

Note to my current employer and any recruiters: I'm happy where I am.