Generate Google Sitemap From A List of URLs In A Text File

I had around 2,000 webpage URLs listed in a text file that needed to be turned into a simple Google sitemap.

I decided to create a quick, fit-for-purpose Google Sitemap generator console application. The program reads the text file line by line and passes each non-empty line to an XmlTextWriter, which writes it out in the required sitemap XML format and saves the result as sitemap.xml in the same folder as the text file.

Feel free to copy and make modifications to the code below.

Code:

```csharp
using System;
using System.Globalization;
using System.IO;
using System.Text;
using System.Xml;

namespace GoogleSitemapGenerator
{
    class Program
    {
        // Namespace required by the sitemaps.org 0.9 protocol.
        const string SitemapNamespace = "http://www.sitemaps.org/schemas/sitemap/0.9";

        static void Main(string[] args)
        {
            // The first command-line argument is the path to the text file of URLs.
            string textFileLocation = (args != null && args.Length > 0) ? args[0] : String.Empty;

            if (String.IsNullOrEmpty(textFileLocation) || !File.Exists(textFileLocation))
            {
                Console.WriteLine("Please enter the full path to where the text file is situated.");
                return;
            }

            // sitemap.xml is written to the same directory as the text file.
            string fullSitemapPath = Path.Combine(GetCurrentFileDirectory(textFileLocation), "sitemap.xml");

            // yyyy-MM-dd date for <lastmod>, independent of the machine's culture settings.
            string lastModified = DateTime.Now.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);

            try
            {
                // "using" closes both the reader and the writer, even if an exception is thrown.
                using (StreamReader sr = File.OpenText(textFileLocation))
                using (XmlTextWriter xmlWriter = new XmlTextWriter(fullSitemapPath, Encoding.UTF8))
                {
                    xmlWriter.Formatting = Formatting.Indented;
                    xmlWriter.WriteStartDocument();

                    // <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
                    xmlWriter.WriteStartElement("urlset", SitemapNamespace);

                    string currentLine;
                    while ((currentLine = sr.ReadLine()) != null)
                    {
                        currentLine = currentLine.Trim();
                        if (currentLine.Length == 0)
                            continue; // skip blank lines

                        xmlWriter.WriteStartElement("url", SitemapNamespace);
                        xmlWriter.WriteElementString("loc", SitemapNamespace, currentLine);
                        xmlWriter.WriteElementString("lastmod", SitemapNamespace, lastModified);
                        //xmlWriter.WriteElementString("changefreq", SitemapNamespace, "weekly");
                        //xmlWriter.WriteElementString("priority", SitemapNamespace, "1.0");
                        xmlWriter.WriteEndElement(); // </url>
                    }

                    xmlWriter.WriteEndElement(); // </urlset>
                    xmlWriter.WriteEndDocument();
                }

                Console.WriteLine("Sitemap successfully created at: {0}", fullSitemapPath);
            }
            catch (Exception ex) when (ex is IOException || ex is UnauthorizedAccessException || ex is ArgumentException)
            {
                Console.WriteLine("Sitemap has not been generated. Please check your text file for any problems. ({0})", ex.Message);
            }
        }

        // Returns the directory containing the given file, using the platform's path rules
        // rather than splitting on '\' by hand.
        static string GetCurrentFileDirectory(string path)
        {
            return Path.GetDirectoryName(Path.GetFullPath(path));
        }
    }
}
```
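If you want to check the line-to-`<url>` mapping without writing a file to disk, the same transformation can be sketched in memory with LINQ to XML (`System.Xml.Linq`). This is a minimal sketch, not the program above: the `SitemapBuilder` class and its `Build` method are hypothetical names, and skipping lines that are not absolute http/https URLs is an assumption added here (the sitemap protocol expects fully qualified URLs).

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper (not part of the original program): builds a sitemap
// <urlset> document in memory from the raw lines of the text file.
public static class SitemapBuilder
{
    // Namespace required by the sitemaps.org 0.9 protocol.
    static readonly XNamespace Ns = "http://www.sitemaps.org/schemas/sitemap/0.9";

    public static XDocument Build(IEnumerable<string> lines, DateTime lastModified)
    {
        // Same yyyy-MM-dd <lastmod> value for every URL, as in the program above.
        string lastmod = lastModified.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);

        IEnumerable<XElement> urls = lines
            .Select(line => line.Trim())              // ignore stray whitespace
            .Where(line => line.Length > 0)           // skip blank lines
            .Where(line => IsAbsoluteHttpUrl(line))   // assumption: drop lines that aren't http(s) URLs
            .Select(line => new XElement(Ns + "url",
                new XElement(Ns + "loc", line),
                new XElement(Ns + "lastmod", lastmod)));

        return new XDocument(
            new XDeclaration("1.0", "utf-8", null),
            new XElement(Ns + "urlset", urls));
    }

    // True only for absolute URLs with an http or https scheme.
    static bool IsAbsoluteHttpUrl(string line)
    {
        return Uri.TryCreate(line, UriKind.Absolute, out Uri uri)
            && (uri.Scheme == Uri.UriSchemeHttp || uri.Scheme == Uri.UriSchemeHttps);
    }
}
```

Usage might look like `SitemapBuilder.Build(File.ReadLines(textFileLocation), DateTime.Now).Save(fullSitemapPath);`. Building the document in memory is convenient for 2,000 URLs; for very large lists the streaming XmlTextWriter approach above keeps memory use flat.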

I will be uploading the console application project, including the executable, shortly.

If I need to login and authenticate a Facebook user in my ASP.NET website, I either use the Facebook Connect's JavaScript library or

If I need to login and authenticate a Facebook user in my ASP.NET website, I either use the Facebook Connect's JavaScript library or  Ever since I decided to expand my online presence, I thought the best step would be to have a better domain name. My current domain name is around twenty-nine characters in length. Ouch! So I was determined to find another name that was shorter and easier to remember.

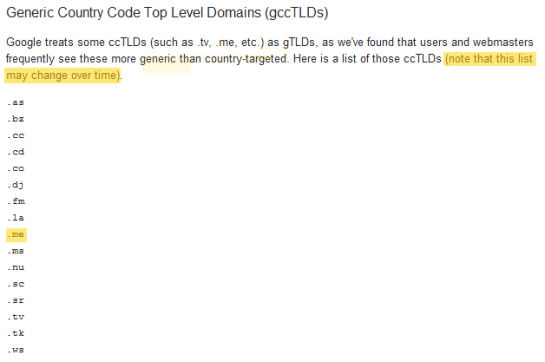

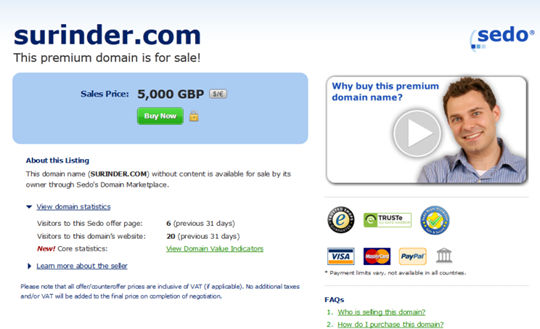

Ever since I decided to expand my online presence, I thought the best step would be to have a better domain name. My current domain name is around twenty-nine characters in length. Ouch! So I was determined to find another name that was shorter and easier to remember.

…if you want a true Android experience.

…if you want a true Android experience.