It's not often you stumble across a piece of code you wrote nine or ten years ago and look back on it with fond memories. For me, it's a jQuery countdown timer I wrote for a quiz in a Sky project called The British, at my current workplace, Syndicut.

Only now, all these years later, have I decided to share the code for old times' sake (after a little sprucing up).

This countdown timer was originally used in quiz questions where the user had a fixed time limit to answer a series of multiple-choice questions correctly and as quickly as possible. The longer they took to respond, the fewer points they received for that question.

If the selected answer was correct, the countdown stopped and the number of points earned and the time taken to select the answer were displayed.
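The project's actual scoring formula isn't shown in this post, so the following is only a rough sketch of how "fewer points the longer you take" could work, assuming points fall linearly with the time remaining. The names `calculatePoints` and `maxPoints` are hypothetical and not part of the original code.

```javascript
// Hypothetical helper: the real project's scoring formula is not shown in this post.
// Assumes points fall linearly from maxPoints (instant answer) to 0 (time ran out).
function calculatePoints(timeRemaining, timeLimit, maxPoints) {
  if (timeLimit <= 0) {
    return 0;
  }

  // Clamp so a late or negative reading can never produce an odd score.
  const clamped = Math.min(Math.max(timeRemaining, 0), timeLimit);

  return Math.round((clamped / timeLimit) * maxPoints);
}

// Example: answering with 7.5 of 10 seconds left, out of a possible 100 points.
console.log(calculatePoints(7.5, 10, 100)); // 75
```

With the timer further down, the first two arguments would map to `Timer.TimeRemaining` and `Timer.TimerStart` at the moment the countdown is stopped.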

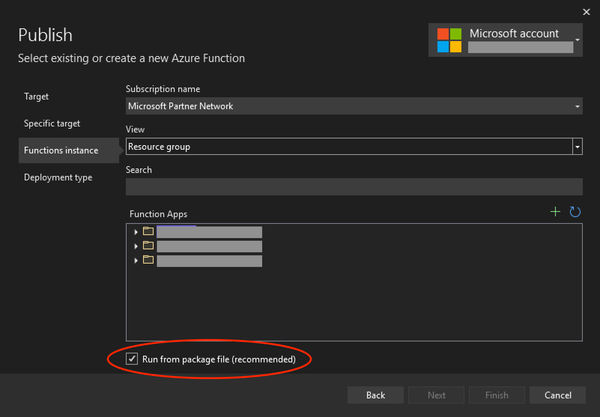

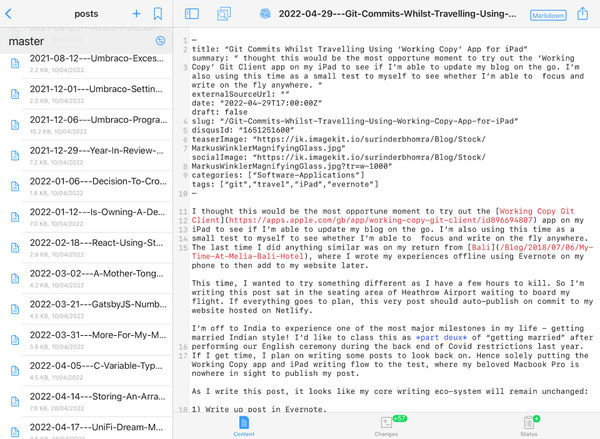

Demonstration of the countdown timer in action:

Of course, the version used in the project was a lot more polished.

Code

JavaScript

const Timer = {
    ClockPaused: false,
    TimerStart: 10, // Countdown length in seconds.
    StartTime: null,
    TimeRemaining: 0,
    EndTime: null,
    HtmlContainer: null,
    TimeoutId: null,

    Start: function (htmlCountdown) {
        // Cancel any tick still pending from a previous run so two loops never overlap.
        clearTimeout(Timer.TimeoutId);

        Timer.ClockPaused = false;
        Timer.StartTime = Date.now();
        Timer.EndTime = Timer.StartTime + Timer.TimerStart * 1000;
        Timer.HtmlContainer = $(htmlCountdown);

        // Ensure any added styles have been reset.
        Timer.HtmlContainer.removeAttr("style");

        Timer.DisplayCountdown();

        // Ensure message is cleared for when the countdown may have been reset.
        $("#message").html("");

        // Show/hide the appropriate buttons.
        $("#btn-stop-timer").show();
        $("#btn-start-timer").hide();
        $("#btn-reset-timer").hide();
    },

    DisplayCountdown: function () {
        if (Timer.ClockPaused) {
            return;
        }

        // Work out the remaining time from the clock, never dropping below zero.
        Timer.TimeRemaining = Math.max((Timer.EndTime - Date.now()) / 1000, 0);

        // Display countdown value in page.
        Timer.HtmlContainer.html(Timer.TimeRemaining.toFixed(2));

        // Calculate percentage to apply different text colours.
        const remainingPercent = Timer.TimeRemaining / Timer.TimerStart * 100;

        if (remainingPercent < 15) {
            Timer.HtmlContainer.css("color", "Red");
        } else if (remainingPercent < 51) {
            Timer.HtmlContainer.css("color", "Orange");
        }

        if (Timer.TimeRemaining > 0) {
            // Schedule the next update in 100 milliseconds.
            Timer.TimeoutId = setTimeout(Timer.DisplayCountdown, 100);
        } else {
            Timer.TimesUp();
        }
    },

    Stop: function () {
        Timer.ClockPaused = true;
        clearTimeout(Timer.TimeoutId);

        const timeTaken = Timer.TimerStart - Timer.TimeRemaining;

        $("#message").html("Your time: " + timeTaken.toFixed(2));

        // Show/hide the appropriate buttons.
        $("#btn-stop-timer").hide();
        $("#btn-reset-timer").show();
    },

    TimesUp: function () {
        $("#btn-stop-timer").hide();
        $("#btn-reset-timer").show();

        $("#message").html("Time's up!");
    }
};

$(document).ready(function () {
    $("#btn-start-timer").click(function () {
        Timer.Start("#timer");
    });

    $("#btn-reset-timer").click(function () {
        Timer.ClockPaused = false;
        Timer.Start("#timer");
    });

    $("#btn-stop-timer").click(function () {
        Timer.Stop();
    });
});

HTML

<div id="container">
    <div id="timer">
        -.--
    </div>
    <br />
    <div id="message"></div>
    <br />
    <button id="btn-start-timer">Start Countdown</button>
    <button id="btn-stop-timer" style="display:none">Stop Countdown</button>
    <button id="btn-reset-timer" style="display:none">Reset Countdown</button>
</div>

Final Thoughts

Looking over this code with fresh eyes after all these years, I can see that the jQuery library is no longer a fixed requirement. It could just as easily be rewritten in vanilla JavaScript. But doing so would be to the detriment of nostalgia.
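For the curious, here is a minimal sketch of what the core of a vanilla version might look like. It is not the original code: `createCountdown` and its options (`seconds`, `onTick`, `onDone`, `now`) are hypothetical names, it assumes the script is loaded after the markup, and it reuses the element IDs from the HTML above. The button show/hide logic and the colour changes are left out to keep it short.

```javascript
// A sketch only: createCountdown and its options are hypothetical names.
function createCountdown({ seconds, onTick, onDone, now = () => Date.now() }) {
  let endTime = null;
  let timerId = null;

  function tick() {
    // Derive the remaining time from the clock so slow ticks never cause drift.
    const remaining = Math.max((endTime - now()) / 1000, 0);
    onTick(remaining);

    if (remaining > 0) {
      timerId = setTimeout(tick, 100);
    } else {
      timerId = null;
      onDone();
    }
  }

  return {
    start() {
      clearTimeout(timerId); // Cancel any tick left over from a previous run.
      endTime = now() + seconds * 1000;
      tick();
    },
    stop() {
      clearTimeout(timerId);
      timerId = null;
      // Time taken so far, in seconds.
      return seconds - Math.max((endTime - now()) / 1000, 0);
    }
  };
}

// Wire it to the same markup as above, but only when a DOM is available.
if (typeof document !== "undefined") {
  const timerEl = document.getElementById("timer");
  const messageEl = document.getElementById("message");

  const countdown = createCountdown({
    seconds: 10,
    onTick: (remaining) => { timerEl.textContent = remaining.toFixed(2); },
    onDone: () => { messageEl.textContent = "Time's up!"; }
  });

  document.getElementById("btn-start-timer").addEventListener("click", () => {
    messageEl.textContent = "";
    countdown.start();
  });

  document.getElementById("btn-stop-timer").addEventListener("click", () => {
    messageEl.textContent = "Your time: " + countdown.stop().toFixed(2);
  });
}
```

Passing the clock in as `now` is purely a convenience for testing the timing logic without a browser. In the page itself the default `Date.now()` is used.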

A demonstration can be seen on my jsFiddle account.